Expert interview with Milad, Head of Artificial Intelligence

What exactly are your tasks at NEURA Robotics?

I run the artificial intelligence (AI) department at NEURA Robotics and have been with the company since day one, along with our CEO, David Reger, and around twelve other people. Part of my job is to tackle novel and ambitious goals and turn them into viable projects. These ideas can come from David, other employees, or the AI team members.

Based on the task, I outline step-by-step projects that enable us to realize different versions of our final goal. For instance, imagine you want to have a conversation with a robot and have the robot understand and respond to you. AI is necessary for that purpose.

Of course, implementing these projects is not always easy. That is why we do our best to make them possible by generalizing them. If, for example, we build a robot to recognize and pick up a glass, we implement a generalized solution so that it can identify and pick up anything else: a plate, a bottle, a teddy, anything. That’s what it means to make a robot cognitive.

How has your experience been since your first day at NEURA Robotics?

The first time I spoke with David and heard about his super-ambitious goals, I was shocked. My team and I were going to be the ones to implement a substantial portion of these plans. Honestly, it kept me up at night for a while. It was the first time in the world that a company was trying something like this, and there were no guidelines or best practices available.

What concerned me the most was finding the right people to achieve our mission. But David kept us motivated, which was extremely helpful. Yes, we had good and bad days, but we tried to learn as much as possible from unpleasant experiences to avoid repeating them. I found that being flexible in the way you think is essential. Adaptability and relying on your own and others’ expertise make everything possible.

How would you define artificial intelligence?

You can summarize AI with the word cognition. Artificial intelligence draws inspiration from the human brain. Humans’ intelligence is natural, so ingrained in us that we often don’t think about it.

With AI, we imitate the human brain mathematically to figure out how it works and transfer that knowledge to machines step by step. Neural networks give us a simple way to replicate neurons mathematically. In the last few years, new hardware such as graphics processing units (GPUs) has made it possible to create deep neural networks with many neurons that work better than conventionally engineered systems. Artificial intelligence means a machine can perceive, understand, listen, see its environment, plan its next actions, and execute them.
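To make the idea of replicating a neuron mathematically concrete, here is a minimal Python sketch. It is an illustration only, not NEURA Robotics' implementation: the function names, the sigmoid activation, and the weights are all assumptions chosen for the example.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus a bias,
    squashed into the range (0, 1) by a sigmoid activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical numbers, chosen only to show the shape of the computation.
single_output = neuron([0.0, 0.0], [1.0, -1.0], 0.0)
layer_output = layer([0.5, -0.2], [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])
```

A deep neural network stacks many such layers, and training adjusts the weights and biases so that the outputs match the examples in the data set.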

Why do we need artificial intelligence?

We need artificial intelligence to solve problems that other sophisticated technology cannot solve. For instance, if you want to design a car that moves fast, you can use physics equations to calculate the result. However, that result will still be only an approximation.

In robotics, we need to compensate for that approximation, and the only way to do that is through machine learning. With AI, you can create an extensive data set and pass it to the model, which understands it in ways you cannot even explain.

For example, if you want to recognize a cat in a picture without AI, you will have to describe it in your code: a cat has this type of eyes, this color, and it looks like this. But if you show it a different cat from the one you first chose, this method cannot recognize it. With AI, you can create a dataset of many cat images, train a simple deep learning model with it, and it will identify any cat it comes across. Think about how you learned about cats: as a child, you saw lots of cats or cat pictures, and if you asked your mother what those animals were, she would tell you “cats”, without explaining to you what a cat looks like. After a while, you learned to recognize any cat, regardless of its color or size.

Can you give examples of some real-world applications?

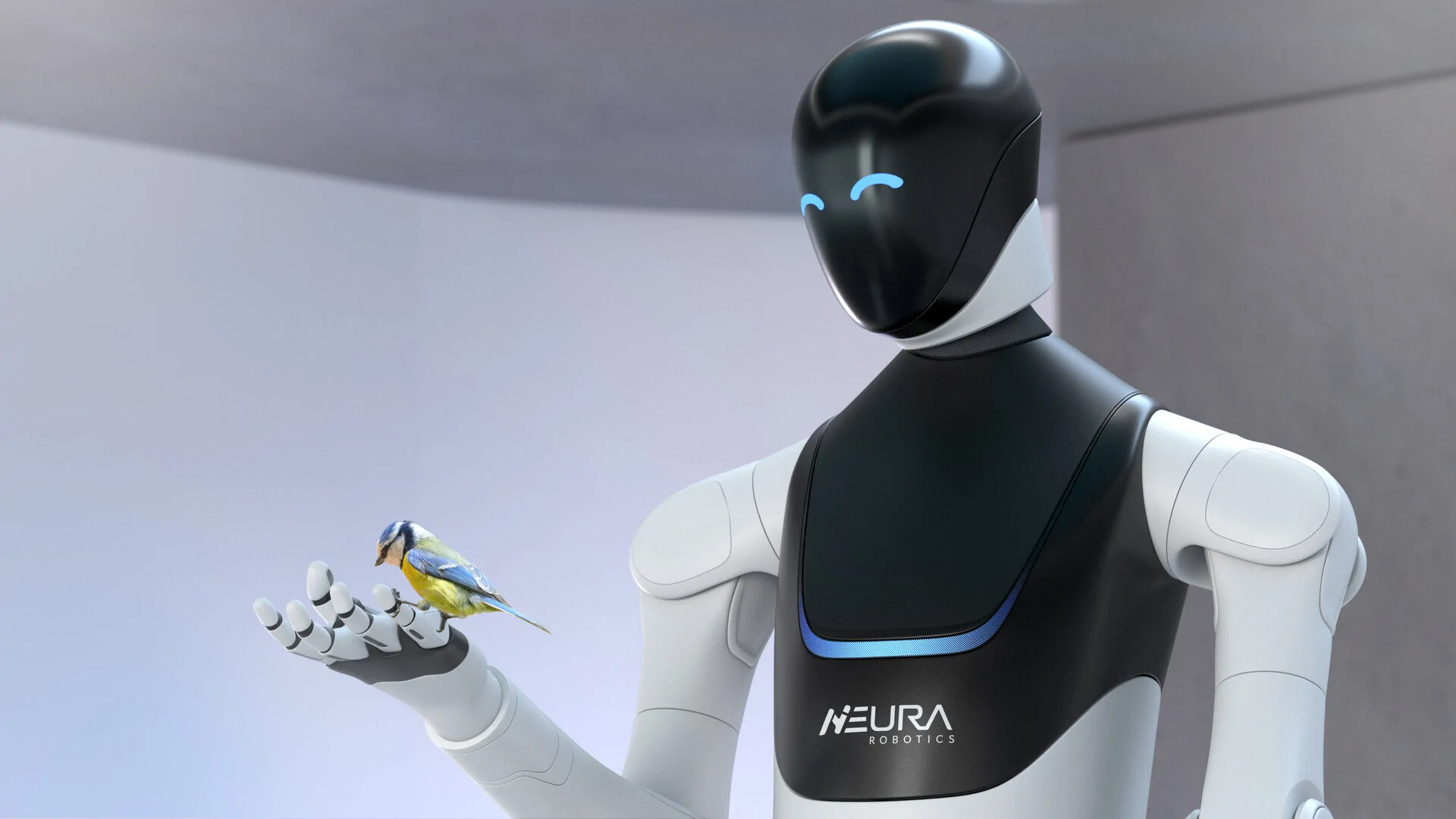

AI can help in many industry sectors, especially those requiring repetitive human actions. Recently, AI has become a hot topic in robotics. Robots are not classically AI-enabled or cognitive, but that is changing. Cognitive robots can carry out numerous challenging tasks or even rescue people in dangerous situations like fires, floods, and earthquakes. Delegating such risky work to robots would spare people unnecessary danger. Smart, multi-purpose robots could take care of the elderly and assist patients in hospitals too.

Is artificial intelligence related to machine learning in robotics? And how?

Machine learning is a subset of artificial intelligence. AI is a broader sphere, but when scientists talk about machine learning and deep learning, they are specifying some aspects of AI. With machine learning and deep learning, we develop algorithms and mathematical toolsets that are predictive and trainable and can be used to build cognitive robots.

Can you give some insights into what you and your team have achieved for our cognitive robots?

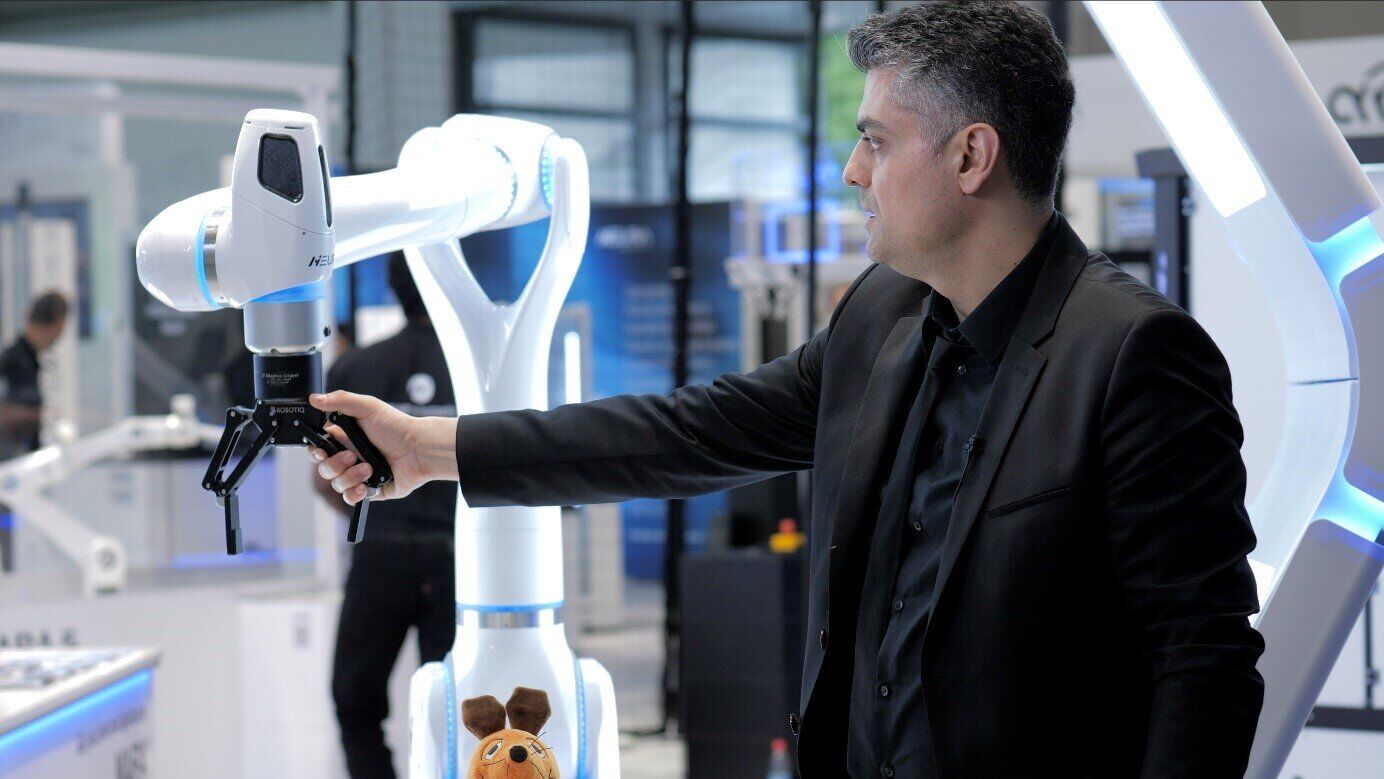

We have developed a lot in a brief period over the last three years. For example, by using a 3D camera, we can easily train the robot to recognize new items. It can also distinguish between objects and pick up a specific one, such as a glass instead of tape or a water bottle. We can train a completely customized grasp just by showing it to the robot, without needing to write a single line of code. The robot can not only grab any object but can even be instructed to do so from a specific angle or point.

This is especially important. Think about the robot picking up a glass from a table: it must know how to grasp and place it correctly on the table. We are constantly developing and improving everything we have achieved. We can even run all our AI tools offline because the methods are all integrated and optimized inside the robot controller. Running everything locally on the robot ensures that the data on the robot cannot be accessed through the Internet, adding an extra level of safety.

Our robots support voice commands and gesture control and can respond to users or even tell a joke!

What are the components of our robots that give them cognitive abilities?

The first part that makes the robot cognitive is the integrated sensors. Robots should be able to sense their surroundings, see the environment, and respond when spoken to. The 3D camera, the microphone, and the specific, combined technologies we developed ourselves, like touchless safe human detection, are must-haves for a cognitive robot. The sensors constantly collect data, and the robot learns from it and adapts to it, while the optimized processing software and hardware help it understand that data.

What are your plans for the future of robots at NEURA Robotics?

Cloud solutions are a fantastic opportunity for our robots, and we are researching and developing in this direction. We are not waiting for others to come up with a solution in the future – we are designing our future now. We are also working on continuous learning techniques, improving them as much as possible so that our robot can be even better at learning from human actions. That is a huge step. We are working on a new generation of service robots for private individuals and industrial settings, for our offices and our homes.

How do you test the intelligence of robots? Is there a way to measure their intelligence?

There are at least two types of tests: qualitative and quantitative. If we want to test the robot’s understanding qualitatively, we can talk to the robot and see how it answers. For quantitative tests, we use testing software to store a lot of data and run machine learning evaluations on it. This data set is divided into a training set and a test (or evaluation) set. With these, you check whether what you did is correct or not. Quantitative testing means you can run multiple test programs, but you must do that correctly. We develop test code for every software module.
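The quantitative approach described above, splitting a data set into a training set and a test set, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not NEURA Robotics' test software: the data, the 80/20 split, the threshold "model", and all function names are hypothetical.

```python
import random

def split_dataset(samples, test_fraction=0.2, seed=0):
    """Shuffle the samples and hold out a fraction as the test set."""
    rng = random.Random(seed)
    shuffled = samples[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)

def fit_threshold(train):
    """Toy 'model': the midpoint between the mean value of each class."""
    class0 = [x for x, label in train if label == 0]
    class1 = [x for x, label in train if label == 1]
    return (sum(class0) / len(class0) + sum(class1) / len(class1)) / 2

def accuracy(threshold, data):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(1 for x, label in data if int(x > threshold) == label)
    return correct / len(data)

# Hypothetical data: a single measurement x, labeled 1 when x >= 50.
data = [(x, int(x >= 50)) for x in range(100)]
train, test = split_dataset(data)
threshold = fit_threshold(train)   # learned from the training set only
test_accuracy = accuracy(threshold, test)  # measured on unseen samples
```

The point of the design is that the test set is never used for training, so the accuracy measured on it indicates how well the model handles data it has not seen before.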

In your expert opinion, what is the future of the robotics industry with artificial intelligence?

The future holds endless possibilities. The exciting but challenging thing is that we can all have cognitive capabilities in our robots. Robots will help human workers in all fields, taking over repetitive, monotonous, or dangerous tasks. One day, robots will be as widespread as smartphones. I am sure everyone remembers when there were no mobile phones, or only a few basic ones, but now we cannot live without them. That is why I see a future where we cannot live without intelligent robots, because they will make our lives easier. They can help us at home, in factories, hospitals, nursing homes, schools, and everywhere else. People won’t need special skills to operate and interact with robots, only abilities they already use habitually, such as talking, pointing, or explaining. We are developing robots that are versatile and can do everything. That is my vision for the robotics industry.

Read more about NEURA's technologies HERE.

Download our whitepaper about cognitive robots HERE.